Introduction to VLA

Lead: Martin Chen

Join us this term as the Robotics Society launches a new Vision–Language–Action (VLA) project, led by Martin Chen.

🤖 What is VLA?

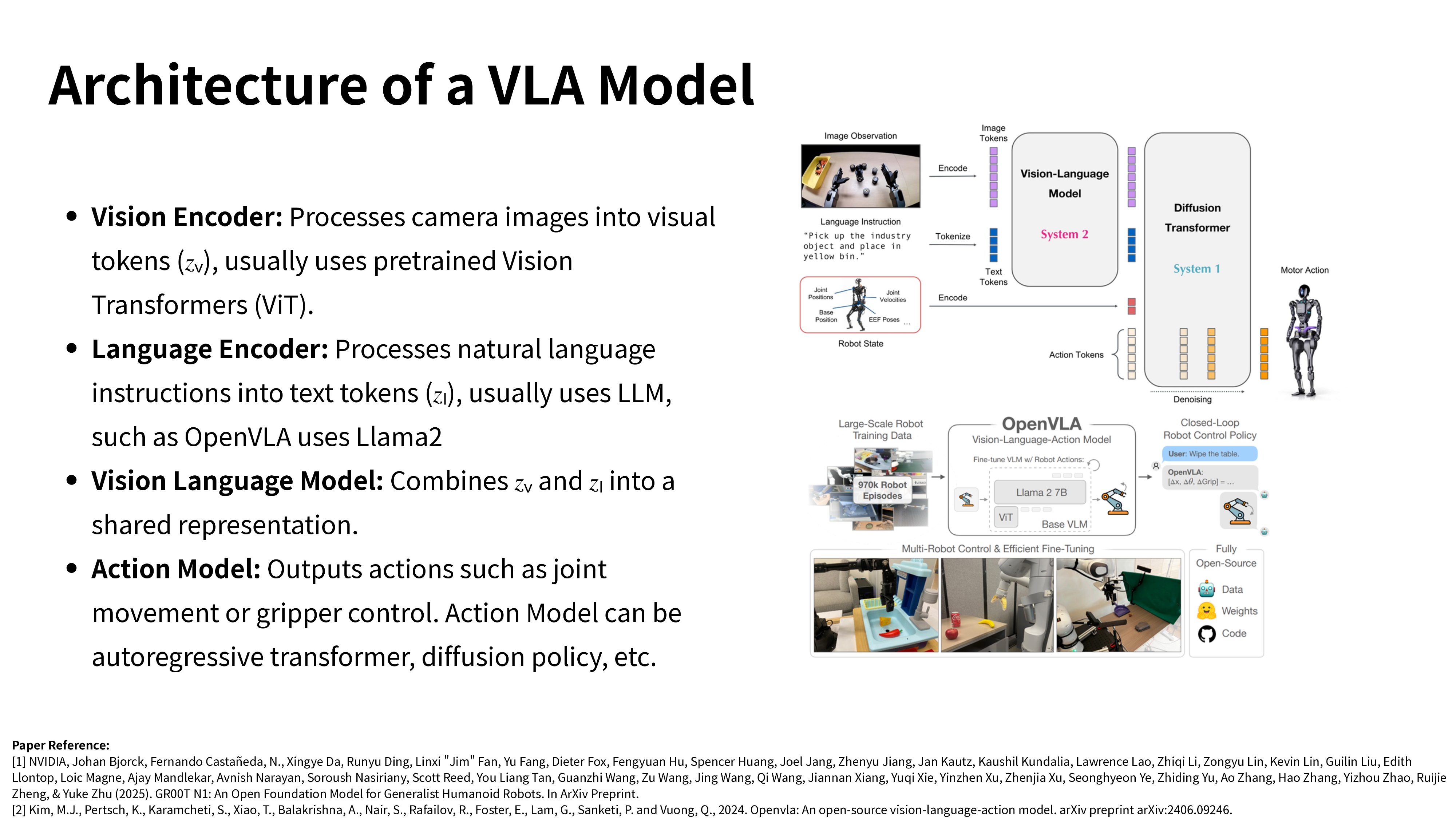

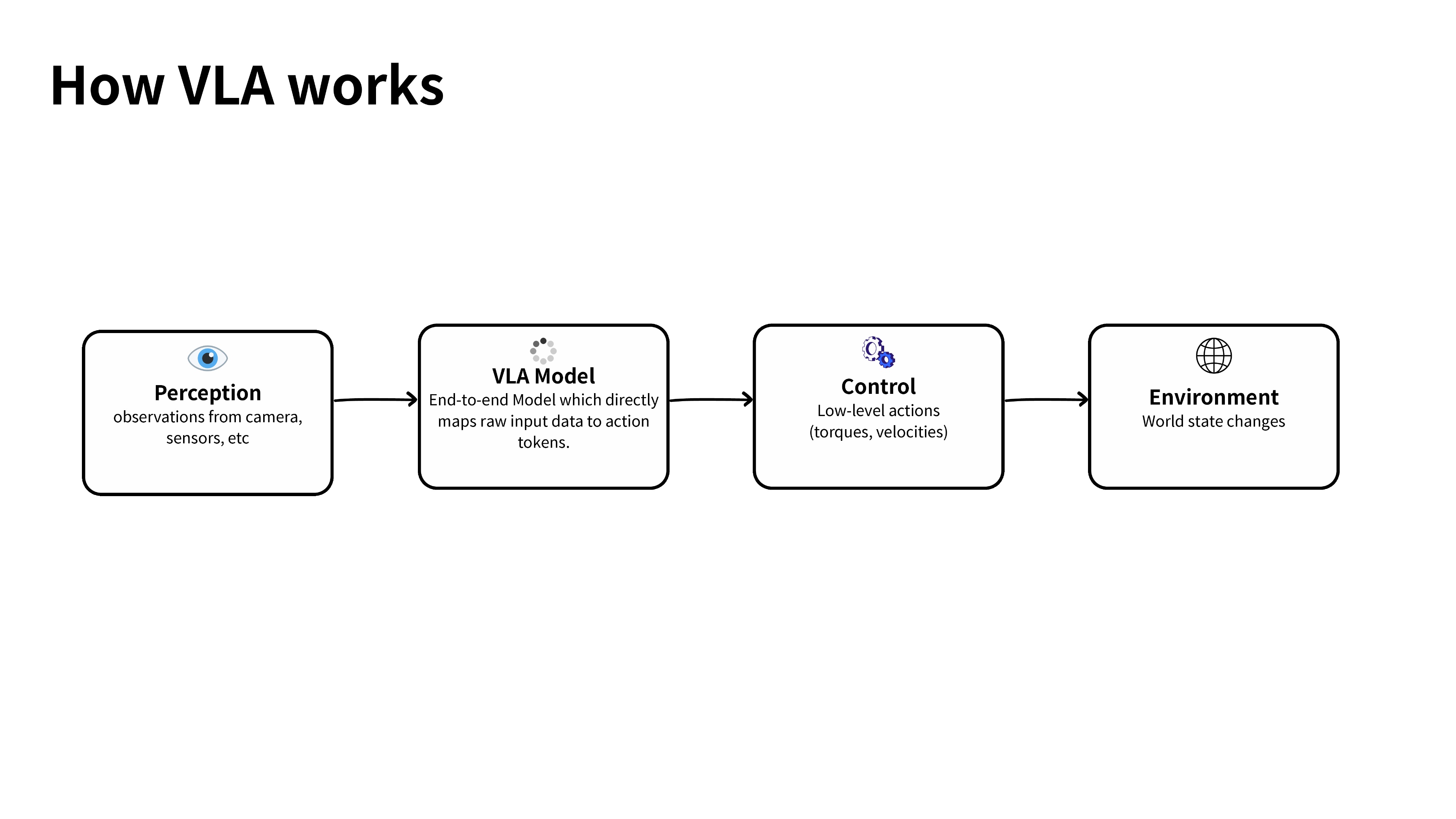

VLA is a modern robotics approach where robots learn to

- 👀 see the world through vision

- 🗣 understand human instructions through language

- 🦾 generate actions in the real world

using learning-based methods instead of hand-crafted rules.
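To make the three pieces concrete, here is a minimal sketch of what a VLA policy's interface could look like in Python. All names (`Observation`, `Action`, `VLAPolicy`, `ZeroPolicy`, `run_episode`) are hypothetical and chosen for illustration only. The placeholder policy always outputs "do nothing"; in a real VLA system, a learned model would sit inside `act`.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Observation:
    # Vision: a camera frame, here a toy grid of pixel intensities.
    image: List[List[float]]
    # Language: the instruction given by a human.
    instruction: str


@dataclass
class Action:
    # Action: how much to move each joint, plus a gripper command.
    joint_deltas: List[float]
    gripper_closed: bool


class VLAPolicy(Protocol):
    """Anything with an act() method mapping an observation to an action."""

    def act(self, obs: Observation) -> Action: ...


class ZeroPolicy:
    """Placeholder policy (assumption for illustration): always stays still.

    A real VLA would replace the body of act() with a learned network
    that reads both the image and the instruction.
    """

    def __init__(self, num_joints: int) -> None:
        self.num_joints = num_joints

    def act(self, obs: Observation) -> Action:
        return Action(joint_deltas=[0.0] * self.num_joints, gripper_closed=False)


def run_episode(policy: VLAPolicy, observations: List[Observation]) -> List[Action]:
    # Query the policy once per observation, in order.
    return [policy.act(obs) for obs in observations]
```

For example, `run_episode(ZeroPolicy(num_joints=6), [obs])` returns one `Action` per observation. Swapping in a trained policy would leave the rest of the loop unchanged, which is the main appeal of keeping the interface this small.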

🚀 Why VLA?

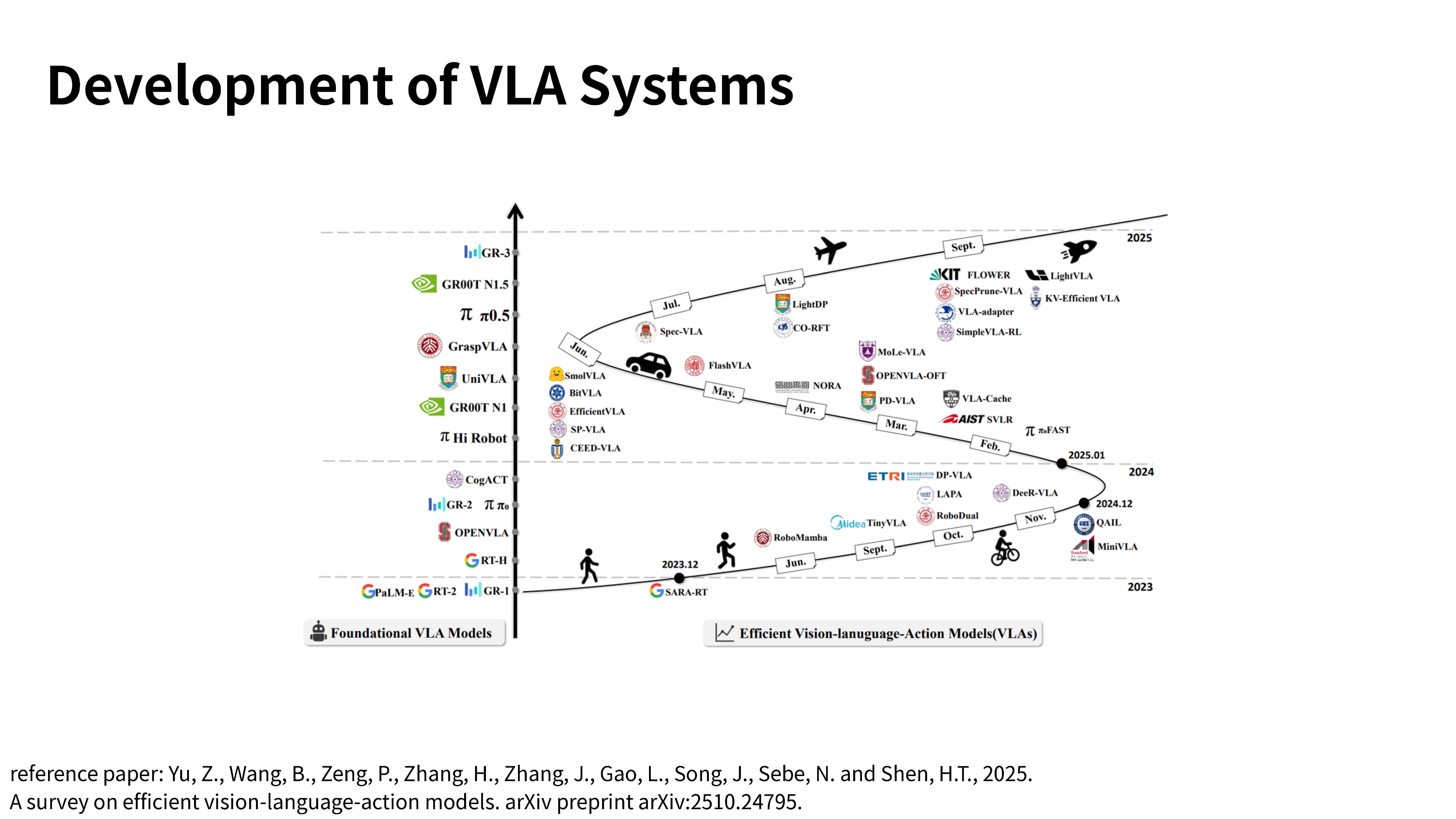

VLA is where modern robotics is heading. With recent advances in vision and language models and larger robot datasets, learning-based manipulation has become much more practical and is now widely used in both research and industry.

🛠 What we’ll do this term

- Work with real robot datasets

- Train simple imitation policies in simulation

- Break down what a VLA system actually looks like

- Discuss what goes wrong when deploying on real robots (sim2real)
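As a taste of the "imitation policy" item above, here is a toy behaviour-cloning sketch in plain Python. It is not the project's actual code: the 1-D reaching task, the scripted expert, and every function name are assumptions made up for this example. The idea is simply to record what an expert does, then fit a policy that reproduces those actions.

```python
import random


def expert_action(position, target):
    # Hypothetical scripted expert: move halfway toward the target each step.
    return 0.5 * (target - position)


def collect_demos(n, seed=0):
    # Record (state, action) pairs from the expert on random states.
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        position = rng.uniform(-1.0, 1.0)
        target = rng.uniform(-1.0, 1.0)
        demos.append(((position, target), expert_action(position, target)))
    return demos


def act(params, position, target):
    # Linear policy: action = w_pos * position + w_tgt * target + bias.
    w_pos, w_tgt, bias = params
    return w_pos * position + w_tgt * target + bias


def train_bc(demos, lr=0.1, steps=500):
    # Behaviour cloning: full-batch gradient descent on the mean squared
    # error between the policy's actions and the expert's actions.
    w_pos, w_tgt, bias = 0.0, 0.0, 0.0
    n = len(demos)
    for _ in range(steps):
        g_pos = g_tgt = g_bias = 0.0
        for (position, target), action in demos:
            error = act((w_pos, w_tgt, bias), position, target) - action
            g_pos += 2.0 * error * position / n
            g_tgt += 2.0 * error * target / n
            g_bias += 2.0 * error / n
        w_pos -= lr * g_pos
        w_tgt -= lr * g_tgt
        bias -= lr * g_bias
    return w_pos, w_tgt, bias


def rollout(params, start, target, steps=20):
    # Run the learned policy in the toy "simulator": position += action.
    position = start
    for _ in range(steps):
        position += act(params, position, target)
    return position
```

Calling `train_bc(collect_demos(200))` should recover weights close to the expert's rule, and `rollout` then drives the position toward the target. Real VLA policies swap the linear model for a neural network and the two-number state for images and text, but the training loop has the same shape.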

🎯 Goal

The project is designed to be beginner-friendly. By the end of the term, members should understand how a VLA-style policy can be deployed on a real robot, such as a LeRobot-compatible arm.

More details coming soon. Feel free to ask if you’re curious or want to get involved 🤩